TaskingAI is a Backend as a Service (BaaS) platform designed to simplify the development and deployment of LLM-based agents. It provides unified access to hundreds of large language models (LLMs) along with the building blocks of AI applications: tools, retrieval-augmented generation (RAG) systems, assistants, and conversation history. With an emphasis on scalability and flexibility, TaskingAI aims to close the gap between prototyping and production by giving developers a single interface for building and deploying AI-native apps.

What Does It Do?

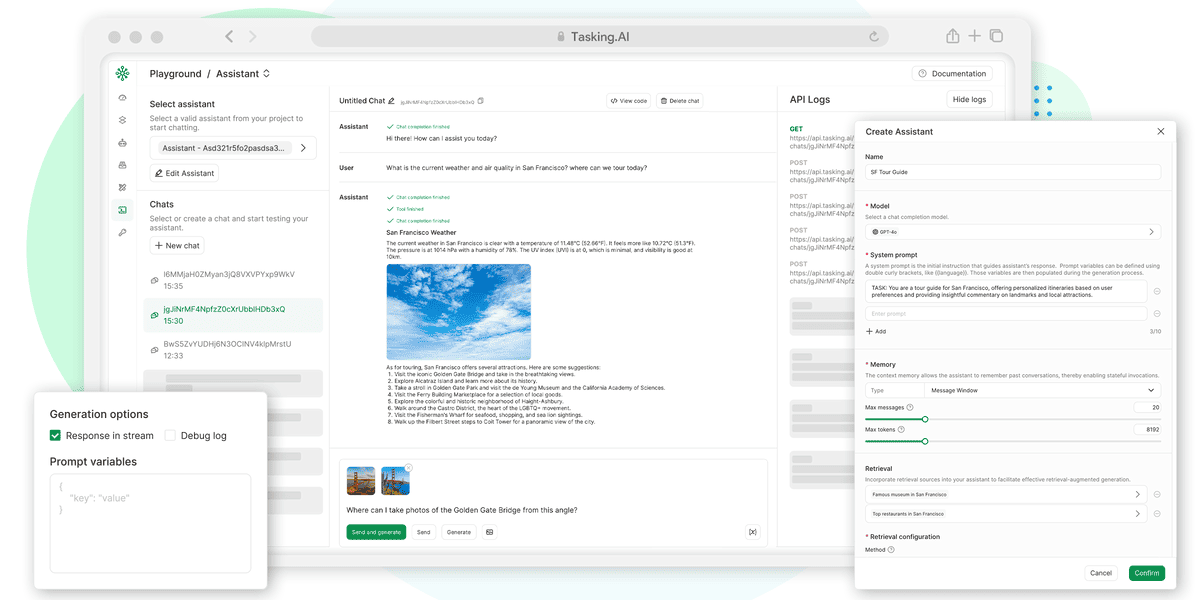

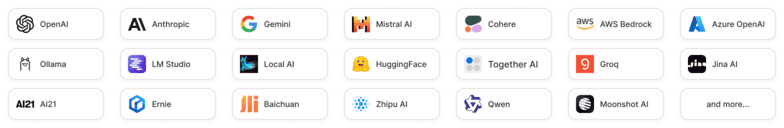

TaskingAI unifies LLM workflows behind a single API for hundreds of AI models, including hosted models from providers such as OpenAI and Anthropic and local models served via Ollama and LM Studio. Its modular design separates AI logic (server-side) from client-side development, so developers can prototype in the console and then scale via RESTful APIs or SDKs. Key functionality includes extensibility through custom plugins (for example, Google Search or stock market data retrieval), enterprise-grade RAG systems, and multi-tenancy support for enterprise software. It also offers one-click deployment, asynchronous execution built on Python FastAPI for performance, and a simple UI for testing workflows and managing projects. Applications range from interactive demos to enterprise productivity software and multi-tenant business applications.
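To make the "single API over many providers" idea concrete, here is a minimal Python sketch of the pattern. It is not TaskingAI's actual SDK or API: the `UnifiedClient` class, the `provider/model` naming convention, and the `echo_adapter` stub are all hypothetical names chosen for illustration, and the adapters return canned text instead of calling a real model.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List

Message = Dict[str, str]
# A provider adapter takes a model name and a message list and returns reply text.
Adapter = Callable[[str, List[Message]], Awaitable[str]]


@dataclass
class UnifiedClient:
    """Hypothetical single entry point that routes requests to provider adapters."""

    adapters: Dict[str, Adapter]

    async def chat_completion(self, model_id: str, messages: List[Message]) -> str:
        # Assumed convention: model ids look like "provider/model".
        provider, _, model = model_id.partition("/")
        if provider not in self.adapters or not model:
            raise ValueError(f"unknown model id: {model_id!r}")
        return await self.adapters[provider](model, messages)


async def echo_adapter(model: str, messages: List[Message]) -> str:
    # Stand-in for a real provider call; it only echoes the last message.
    await asyncio.sleep(0)
    return f"[{model}] {messages[-1]['content']}"


def build_demo_client() -> UnifiedClient:
    # Both providers share the stub adapter; real adapters would differ per vendor.
    return UnifiedClient(adapters={"openai": echo_adapter, "ollama": echo_adapter})


async def ask_all(client: UnifiedClient, model_ids: List[str], prompt: str) -> List[str]:
    # Send the same prompt to several models concurrently through one interface.
    messages = [{"role": "user", "content": prompt}]
    return await asyncio.gather(
        *(client.chat_completion(m, messages) for m in model_ids)
    )
```

Because client code only sees `chat_completion`, swapping a hosted model for a local one is a change to the model id and not to the calling code, which is the decoupling the paragraph above describes.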

Pricing

The project is open source under the Apache-2.0 license.

Summary

TaskingAI positions itself as an adaptable BaaS platform for LLM-powered applications, addressing the pain points of scalability, modularity, and vendor lock-in. By consolidating model access, decoupling components, and streamlining deployment, it targets developers building enterprise-level or multi-tenant AI applications. Although clear pricing and background information are lacking, its focus on flexibility, performance, and workflow management makes TaskingAI a strong choice for teams that prioritize rapid development and scalability in LLM projects.
